Abstract

Background

With growing demand in the global media and Video-on-Demand (VOD) industries, efficiently producing high-quality 3D scenes has become a pressing challenge. Traditional methods, such as building physical sets and modeling 3D assets by hand, are time-consuming and costly. While diffusion models have shown promise in generating striking images, they often lack the flexibility and precision needed to meet specific industry demands.

Objective

SCENEDERELLA aims to democratize 3D scene creation by providing a user-friendly platform where anyone can iteratively refine their ideas through text prompts. Users can regenerate 2D scenes until one matches their vision. Once they are satisfied, the system converts the selected 2D scene into a fully realized 3D environment. This workflow lets both novices and professionals turn their creative ideas into tangible 3D representations with minimal effort.

Key Ideas

- Iterative Scene Refinement: Users can repeatedly regenerate 2D scenes based on their text prompts until the output aligns with their creative vision.

- Personalized Scene Generation: The system adapts to user inputs, ensuring outputs are tailored to individual preferences, whether for film, gaming, or advertising applications.
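
The iterative workflow described above (prompt, generate a 2D candidate, regenerate or revise until accepted, then convert to 3D) can be illustrated as a simple human-in-the-loop loop. This is a minimal sketch under stated assumptions, not SCENEDERELLA's implementation: `generate_2d_scene`, `lift_to_3d`, `refine_then_lift`, the `Scene2D`/`Scene3D` types, and the feedback convention are all hypothetical placeholders, and the two stage functions are stubs where a text-to-image diffusion model and a 2D-to-3D conversion step would go.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Scene2D:
    """A generated 2D candidate (hypothetical placeholder; a real system would hold an image)."""
    prompt: str
    seed: int


@dataclass
class Scene3D:
    """A 3D scene lifted from an accepted 2D candidate (hypothetical placeholder)."""
    source: Scene2D


def generate_2d_scene(prompt: str, seed: int) -> Scene2D:
    # Hypothetical stub standing in for a text-to-image diffusion call.
    return Scene2D(prompt=prompt, seed=seed)


def lift_to_3d(scene: Scene2D) -> Scene3D:
    # Hypothetical stub standing in for the 2D-to-3D conversion stage.
    return Scene3D(source=scene)


# Assumed feedback convention: return None to accept the candidate, or a
# (possibly unchanged) prompt to request another round of generation.
Feedback = Callable[[Scene2D], Optional[str]]


def refine_then_lift(prompt: str, feedback: Feedback, max_rounds: int = 10) -> Optional[Scene3D]:
    """Regenerate 2D candidates until the reviewer accepts one, then lift it to 3D."""
    for round_idx in range(max_rounds):
        # Using the round index as the seed gives a fresh candidate each round,
        # even when the reviewer resubmits the same prompt.
        candidate = generate_2d_scene(prompt, seed=round_idx)
        revised = feedback(candidate)
        if revised is None:
            return lift_to_3d(candidate)
        prompt = revised
    return None  # no candidate accepted within the round budget


if __name__ == "__main__":
    def reviewer(scene: Scene2D) -> Optional[str]:
        # Hypothetical user: accept once the prompt asks for a night scene,
        # otherwise refine the prompt and regenerate.
        if "at night" in scene.prompt:
            return None
        return scene.prompt + " at night"

    result = refine_then_lift("a rainy city street", reviewer)
    print(result)
    # Scene3D(source=Scene2D(prompt='a rainy city street at night', seed=1))
```

The `max_rounds` cap is an added assumption so the loop always terminates; the source only states that users regenerate until the scene matches their vision.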

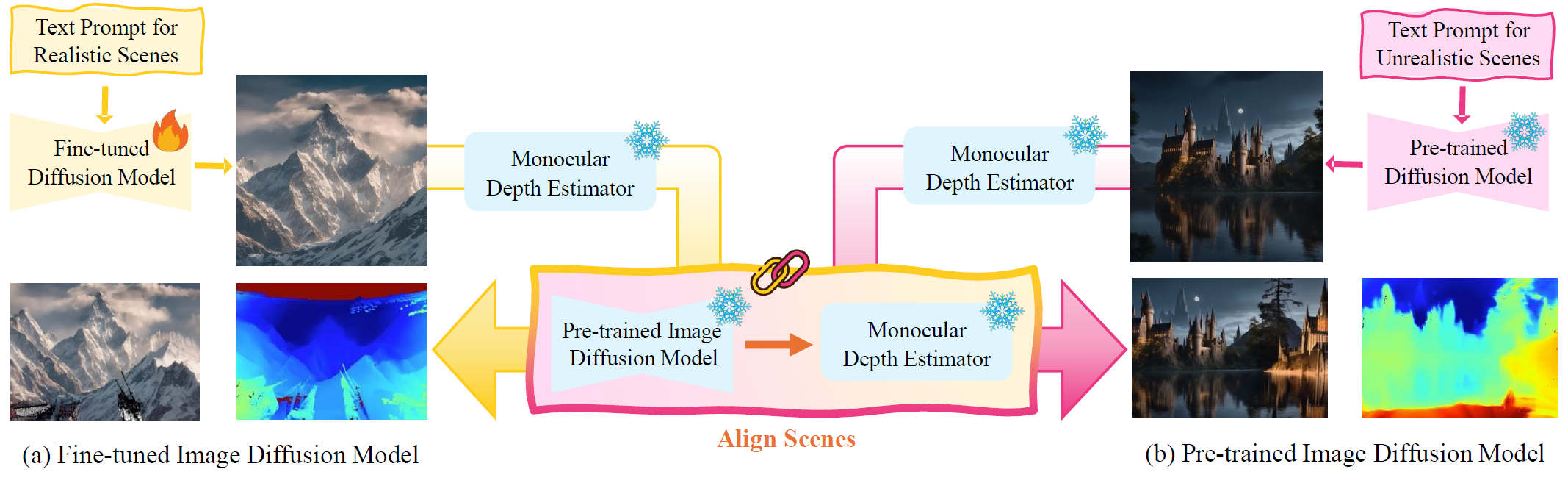

Figure 1: A Framework of SCENEDERELLA.

Acknowledgment

This work was partly supported by an Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (2021-0-01341, Artificial Intelligence Graduate School Program (Chung-Ang University)) and by the MSIT (Ministry of Science and ICT), Korea, under the Graduate School of Metaverse Convergence support program (IITP-2023(2024)-RS-2024-00418847) supervised by the IITP.

Citation

@inproceedings{lee2025scenederella,
  title={SCENEDERELLA: Text-Driven 3D Scene Generation for Realistic Artboard},
  author={Lee, Jungmin and Noh, Haeun and Lee, Jaeyoon and Choi, Jongwon},
  booktitle={2025 IEEE International Conference on Consumer Electronics (ICCE)},
  pages={1--6},
  year={2025},
  organization={IEEE}
}